Abstract:

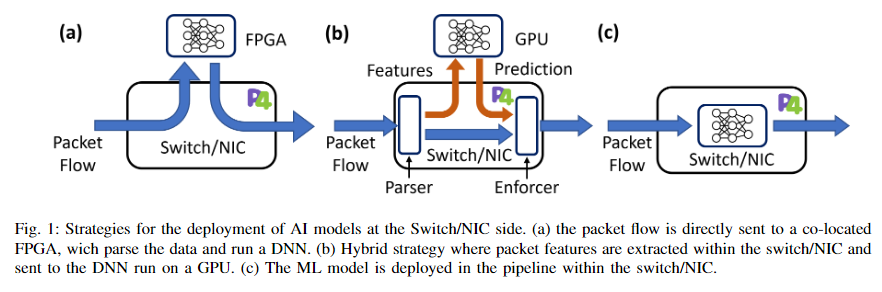

In-network function offloading is a key enabler of SDN-based data plane programmability: it enhances network operation and awareness while speeding up applications and reducing the energy footprint. Offloading network functions that exploit machine learning and artificial intelligence has recently been explored through intermediate solutions, such as feature extraction acceleration and mixed architectures that include AI-specific platforms (e.g., GPU, FPGA). Indeed, the P4 language allows deep neural networks (DNNs) to be programmed inside the pipelines of both software and hardware switches and NICs. However, programmable hardware pipeline chipsets have significant computing limitations (e.g., missing arithmetic logic units, limited and slow stateful registers) that prevent a DNN from being programmed directly to operate at wire speed. This paper proposes an innovative knowledge distillation technique that maps a DNN into a cascade of lookup tables (i.e., flow tables) with limited entry size. The proposed mapping avoids stateful elements and arithmetic operators, the need for which has so far prevented the deployment of DNNs within hardware switches. The evaluation considers a cybersecurity use case targeting a DDoS mitigator network function, and shows that the lossless mapping reduction and feature quantization have negligible impact.
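
To make the idea of a lookup-table cascade concrete, the following is a minimal Python sketch and not the distillation technique proposed in the paper. It assumes a hypothetical toy network (the names `HIDDEN`, `OUTPUT`, `LEVELS`, and all weights are invented for illustration), 2-bit quantized input features, binary step activations, and a fan-in of two per hidden neuron so that every table stays small. Under those assumptions each neuron can be precomputed into a table keyed by its quantized inputs, and inference reduces to exact-match lookups with no arithmetic or state at lookup time.

```python
from itertools import product

LEVELS = 4  # assumption: each input feature is quantized to 2 bits (values 0..3)

# Hypothetical toy network: 4 quantized features -> 2 hidden neurons -> 1 output.
# Each hidden neuron reads only two features, which bounds its table at 16 entries.
HIDDEN = [
    {"inputs": (0, 1), "weights": (1.0, -0.5), "bias": -1.0},
    {"inputs": (2, 3), "weights": (-0.75, 1.25), "bias": 0.25},
]
OUTPUT = {"weights": (1.0, 1.0), "bias": -1.5}  # fires only if both hidden bits are 1


def step(x):
    """Binary step activation (an assumption made to keep tables 1 bit wide)."""
    return 1 if x >= 0 else 0


def neuron(weights, bias, values):
    """Reference arithmetic for one neuron: weighted sum, bias, activation."""
    return step(sum(w * v for w, v in zip(weights, values)) + bias)


def forward_arithmetic(features):
    """Ordinary forward pass, used only offline as the reference."""
    hidden = tuple(
        neuron(h["weights"], h["bias"], [features[i] for i in h["inputs"]])
        for h in HIDDEN
    )
    return neuron(OUTPUT["weights"], OUTPUT["bias"], hidden)


def build_tables():
    """Enumerate every quantized input combination once and store the result."""
    hidden_tables = [
        {
            key: neuron(h["weights"], h["bias"], key)
            for key in product(range(LEVELS), repeat=len(h["inputs"]))
        }
        for h in HIDDEN
    ]
    output_table = {
        key: neuron(OUTPUT["weights"], OUTPUT["bias"], key)
        for key in product((0, 1), repeat=len(HIDDEN))
    }
    return hidden_tables, output_table


def forward_tables(features, hidden_tables, output_table):
    """Inference as a cascade of exact-match lookups, with no arithmetic."""
    hidden = tuple(
        table[tuple(features[i] for i in h["inputs"])]
        for h, table in zip(HIDDEN, hidden_tables)
    )
    return output_table[hidden]
```

In this sketch the table sizes grow as the number of quantization levels raised to the fan-in, which is why the toy network restricts both; how the paper keeps entry counts limited for a real DNN is not specified in the abstract.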

https://zenodo.org/records/11029807